Disguising LED Virtual Production Stages

Disguise is the platform for creatives and technologists to imagine, create and deliver spectacular visual experiences. fxguide covered their work on the film Solo: A Star Wars Story. The team at Lux Machina used the disguise specialist projection mapping software to handle the immersive environment created by the virtual sets. disguise software was used on Solo, as the VFX supervisor Rob Bredow wanted to make sure they had the absolute best image quality with projected imagery outside the windows of the Millennium Falcon cockpit set. Much as we are seeing today with LED sets, Solo used projector screens to provide contact lighting on the actors and capture the screens as part of the main camera filming, thus avoiding any keying or need for green screens.

The company has a long track record in both theatre and concerts of producing complex projection experiences. “We make hardware and software products that are used to imagine, create, design and then deliver big, complex video-based shows, experiences or production environments,” explains Tom Rockhill, CSO at disguise. Their combined H/W and S/W solutions have been used recently for concerts by Beyoncé, Justin Timberlake and Taylor Swift, who have all been touring with disguise-driven stage sets. They have also produced complex visual installations in theatres in the West End and on Broadway for shows such as Dear Evan Hansen, The Lehman Trilogy, Frozen, The Musical, and Harry Potter and the Cursed Child. “All of these shows are now using projection elements and we sit at the heart of that kind of storytelling technology stack,” he adds. Prior to current restrictions on events, the software was being deployed routinely for camera mapping video projection on the outside of large buildings and at sporting events. The xR (extended reality) feature set within the disguise technology has powered real-time video elements (shown below running in Notch) in performances such as Dave’s performance at the Brit Awards in the UK, where his Psychodrama won Album of the Year.

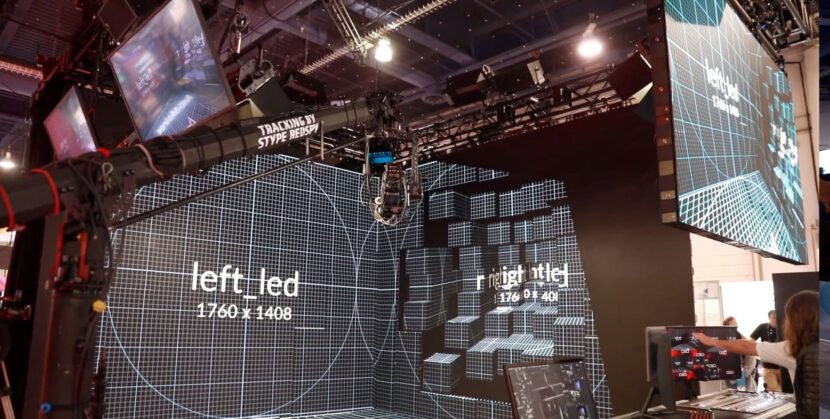

Prior to the COVID lockdown, disguise had already started to focus more on LED technology, and once the lockdown occurred this shift accelerated. disguise has more than 2,000 artists and projects using its disguise Live Experience Platform, which combines its own S/W and H/W with technology from partners such as Epic Games (UE4), Unity, and NCam. Everything starts with the S/W, which simulates the projection: complex building plans of sets, or LIDAR scans of existing sets, can be imported and then mapped. The mapping virtualizes the camera, allowing seamless integration of practical and digital elements.

For example, a studio set may have large LED screens that sit at various angles. The software will work out how to distribute footage to all those screens so that the image looks perfectly aligned from the film camera’s point of view. It further allows for a digital set extension to extend out from those physical screens if the camera is wider than the practical set. The net result is that from the camera’s point of view the actors are on one seamless giant set, which is being updated constantly in real-time to provide a vast virtual set. To the viewer, everything is matched perfectly to the physical space so that actors close to the real screens have the correct contact lighting, but the scale of the virtual set can be the size of a stadium.
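The idea of aligning every screen to the film camera’s point of view can be illustrated with a minimal sketch. This is not disguise’s implementation: it assumes an idealised pinhole camera with no rotation and no lens distortion, looking along +Z, and the function name and parameters are hypothetical. Each LED pixel is treated as a point in world space and projected into the camera’s image, which gives the coordinate of the rendered frame that the pixel should display.

```python
import math

def led_pixel_to_frame_uv(led_pos, cam_pos, hfov_deg, aspect):
    """Project a world-space LED pixel into the camera image.

    Assumes a pinhole camera at cam_pos looking along +Z with no rotation
    (an illustrative simplification). Returns (u, v) in [0, 1] with the
    origin at the top-left of the frame, or None if the pixel lies outside
    the camera frustum.
    """
    x = led_pos[0] - cam_pos[0]
    y = led_pos[1] - cam_pos[1]
    z = led_pos[2] - cam_pos[2]
    if z <= 0:
        return None  # pixel is behind the camera
    half_w = math.tan(math.radians(hfov_deg) / 2.0)  # half-width at z = 1
    half_h = half_w / aspect                          # half-height at z = 1
    ndc_x = (x / z) / half_w
    ndc_y = (y / z) / half_h
    if abs(ndc_x) > 1.0 or abs(ndc_y) > 1.0:
        return None  # not seen by the camera
    return (0.5 + 0.5 * ndc_x, 0.5 - 0.5 * ndc_y)

# A pixel straight ahead of the lens shows the centre of the rendered frame.
centre = led_pixel_to_frame_uv((0.0, 1.5, 4.0), (0.0, 1.5, 0.0), 90.0, 16 / 9)
# A pixel off to the right, on a wall at any angle, maps further across the frame.
right = led_pixel_to_frame_uv((2.0, 1.5, 4.0), (0.0, 1.5, 0.0), 90.0, 16 / 9)
```

Because the lookup depends only on where each pixel sits in space, the angle of the panel it belongs to does not matter, which is why screens at various angles can still present one continuous image to the camera.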

The ‘Designer’ software does this by allowing very specific details of either projectors or LED screens to be loaded, which it then simulates as part of its pre-visualization process. In effect, disguise builds a model during pre-production so that a presentation or live virtual set can be correctly mapped and conceptualized. “It is a pixel perfect simulator, so you know, that every clip of video, or real-time imagery that you’ve brought in and matched inside of our software, will mean the right pixel will be pushed to the correct LED every single time,” explains Rockhill, “accounting for even the exact pitch of the LED screens.” As the software was initially designed in large part for concerts, it also allows props and physical items in the set to be accounted for.

xR allows designers to blend virtual and physical worlds together using Augmented Reality (AR) and Mixed Reality (MR) in live production environments to create immersive experiences.

The company has seven offices and more than 400 partners who can tap into over 1,000 trained operators, so it is well placed to offer an end-to-end solution to independent producers who are keen to use an LED stage but need a validated team to bid, install and operate it. The hardware ranges from high-end media servers for pre-recorded footage to large GPU servers.

Rockhill explains that the xR disguise offering is “really five things:

1. in-camera screens, or LED screens behind the artist or actor,

2. it’s camera tracking,

3. it’s generative or real-time content,

4. it is augmented reality. And then finally,

5. it’s the disguise workflow, which packages it all up and makes it really easy to manipulate and produce something visually amazing”.

For example, the first major awards show in the US to be broadcast since the pandemic was held at the end of August, when the ‘socially distanced’ MTV Video Music Awards were telecast from New York. XR Studios selected the disguise xR workflow as the show’s central technology hub to help create a virtual stage where the awards presentation took place. Additionally, XR Studios produced and enabled creative teams to craft performances for multiple artists, such as Lady Gaga, as live UE4 performances in an Extended Reality environment.

xR is now being deployed beyond broadcast to film production. “We think in about 2 months we will have about 100 LED stages built using xR around the world,” estimates Rockhill. At the time of this interview, there were 51 built, with another 23 being built and 33 being planned and scheduled.

disguise xR most often uses UE4, but on top of Unreal the company also provides spatial and color calibration to allow for seamless camera movement and LED set extensions, Render Engine Synchronization that simplifies the process of locking the moving camera to the set, a very well developed pre-viz software environment, and solid hardware servers to provide the scalable real-time rendering.

Currently, they are working with High Res and DNEG on the new David S. Goyer Foundation series for Apple TV+. On this project, High Res specified the screen systems including the disguise VX4 servers to manage the LED panels displaying a 10-bit workflow for accurate lighting, reflections, and rich final pixels to be captured in-camera.

Technical Details

Latency

Latency through any system such as xR is key: if the delay from the camera moving to the background updating is noticeable, the illusion is broken, and the camera operator appears to ‘overshoot’ the background. A latency of 10 frames is more or less the industry standard for professional stages. This is made up of at least 2 to 3 frames in solving the camera position with the studio’s capture volume software. Once the camera tracking is solved, the system must update the software camera’s position, render the new frame, encode and transmit it, and have the hardware LED update. Currently, the most popular method of transmission is NDI (Network Device Interface), due to the limitation of the network hardware in most systems, which is 10G. The encoding and transmit process adds 2 to 3 frames to the total latency. NDI is not old tech: it is considered an open technology that has revolutionized the broadcast industry over the last 5 years by providing high-quality, low-latency, efficient encoding, but it is not fast enough for virtual production.
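To put the frame counts above into more familiar units, here is a rough sketch of the latency budget. The frame counts come from the text; the 24 fps project rate and the 30 degrees per second pan speed are assumptions chosen purely for illustration, and the helper names are hypothetical.

```python
def frames_to_ms(frames, fps):
    """Convert a latency expressed in frames to milliseconds."""
    return frames * 1000.0 / fps

def remaining_frames(total, *stages):
    """Frames left over for the unlisted stages (render, LED processing, ...).

    Each stage is a (low, high) range in frames. Returns (low, high).
    """
    low = total - sum(high for _, high in stages)
    high = total - sum(low_ for low_, _ in stages)
    return low, high

def background_lag_deg(pan_deg_per_s, frames, fps):
    """Angle the camera has already panned before the wall catches up."""
    return pan_deg_per_s * frames / fps

TOTAL = 10          # typical end-to-end latency in frames (from the text)
TRACKING = (2, 3)   # camera solve (from the text, stated as a minimum)
TRANSPORT = (2, 3)  # encode and transmit over NDI (from the text)

total_ms = frames_to_ms(TOTAL, 24)                  # assumed 24 fps
other = remaining_frames(TOTAL, TRACKING, TRANSPORT)
lag = background_lag_deg(30.0, TOTAL, 24)           # assumed 30 deg/s pan
```

Under these assumptions, 10 frames is a little over 400 ms, and a moderate pan leaves the background more than ten degrees behind the camera, which is the ‘overshoot’ described above.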

Because disguise controls the whole process, it can transmit uncompressed images (4K DCI @ 60 fps in 12-bit RGBA) via a 25G network (which is datacenter-level networking). By managing and tuning the CUDA memory transfer, the disguise team is already at 1 frame for this stage, and they are working on making this subframe in the near future. In a world of 9 to 10 frame latency, reducing the process by 2 frames is both significant and highly desirable for high-end productions. xR can operate in nonstandard resolutions and formats (10-bit, 12-bit, even 16-bit, in YUV or RGB), which allows them to push the fidelity of the imagery and still provide low latency. VP LED latency limits on-set panning and tracking speeds in ways most DOPs would love to see solved.
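A back-of-the-envelope calculation shows why uncompressed transmission needs this class of network. The sketch below assumes 4K DCI means 4096 x 2160 pixels, that "12-bit" means 12 bits per channel, and that samples are tightly packed with no blanking or protocol overhead; the real wire format is not described in the source, so treat the numbers as estimates only.

```python
def raw_video_gbps(width, height, fps, bits_per_channel, channels):
    """Raw payload bandwidth in Gbit/s (10^9 bits), with no overhead."""
    bits_per_frame = width * height * bits_per_channel * channels
    return bits_per_frame * fps / 1e9

# 4K DCI, 60 fps, 12 bits per channel
rgba_12 = raw_video_gbps(4096, 2160, 60, 12, 4)  # with alpha
rgb_12 = raw_video_gbps(4096, 2160, 60, 12, 3)   # without alpha
rgb_10 = raw_video_gbps(4096, 2160, 60, 10, 3)   # 10-bit RGB

for label, gbps in [("12-bit RGBA", rgba_12), ("12-bit RGB", rgb_12),
                    ("10-bit RGB", rgb_10)]:
    print(f"{label}: {gbps:.2f} Gbit/s, fits 10G: {gbps <= 10}, "
          f"fits 25G: {gbps <= 25}")
```

Under these assumptions every variant is well beyond a 10G link, which is consistent with most stages relying on compressed NDI. Tightly packed 12-bit RGBA lands at roughly the nominal 25G line rate, so the exact packing and channel layout matter at this end of the range.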

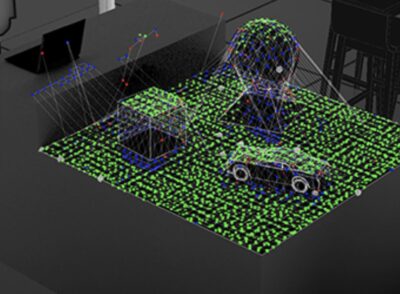

Spatial Mapping

Included as part of disguise’s suite of tools are projection mapping calibration tools such as OmniCal, a structured light tool that takes the base CAD setup of any studio build and then uses structured light patterns to calibrate the exact relationship between the LED surfaces, the projection mapping projectors and the camera. The captured data is used to construct and update a highly accurate 3D representation of the studio, effectively as a point cloud. This ability, with specialist hardware, to calibrate a 360 degree projected environment, and to accurately provide data for the localisation of the camera in the 3D studio volume, is an example of the value-added services provided by a team that is delivering an end-to-end VP solution.
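For readers unfamiliar with structured light, the sketch below shows one classic scheme, binary Gray-code stripes, in which a short sequence of on/off patterns lets a camera work out which display column it is looking at. The source does not say which patterns OmniCal uses, so this is a generic illustration and not a description of the product; all function names are hypothetical.

```python
def gray_encode(n):
    """Binary-reflected Gray code of n."""
    return n ^ (n >> 1)

def gray_decode(g):
    """Inverse of gray_encode."""
    n = 0
    while g:
        n ^= g
        g >>= 1
    return n

def column_patterns(width, bits):
    """patterns[b][x] is 1 if column x is lit in pattern b (MSB first)."""
    return [[(gray_encode(x) >> (bits - 1 - b)) & 1 for x in range(width)]
            for b in range(bits)]

def decode_column(observed_bits):
    """Recover the column index from the on/off sequence seen at one point."""
    g = 0
    for bit in observed_bits:
        g = (g << 1) | bit
    return gray_decode(g)

# Four patterns are enough to label 16 columns uniquely.
patterns = column_patterns(16, 4)
seen_at_column_5 = [p[5] for p in patterns]
recovered = decode_column(seen_at_column_5)
```

Gray codes are popular for this because neighbouring columns differ in only one pattern, so a misread at a stripe boundary shifts the result by a single column rather than by a large jump.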

Dynamic projection volume and calibration

The round tripping involved in setting up the spatial mapping can lead to downtime when the set has to be re-calibrated. “What we are moving towards is that we do that initial base recalibration, but then from that base we can alter some of the parameters in real-time, so the system is making observations and adjustments. One of the biggest problems we have is that you get everything set up and then some extra walks by and smacks something or bumps the camera by accident and then everything’s just a bit out of alignment because the tolerances on these things are very tight,” explains Ed Plowman, CTO at disguise. “This is what we’re trying to overcome with a mixture of really good well-grounded initial calibration, and then real-time compensation.”

Variation in Screens

While a pitch of 2.8 mm is perhaps the most common LED screen specification at the moment on a typical 10m x 5m LED film set, stages vary, and even on a single stage there is variation. A stage may not have consistency in its LED pitch and density of panels due to weight and other factors. The ideal is to be able to compensate for this by mapping the volume, not just outputting to the LEDs as simple screens. “The ultimate end goal for us is to get to a point where, because we understand the spatial mapping, and we’ve done registration and calibration properly with structured light observations directly from the panels themselves, and we understand the dot pitch/LED density, that we can compensate to get a uniform output across the stage,” says Plowman. “Because we’re not doing output to ‘a display’, we’re doing spatial mapping in UV space where the emissions of points of light actually are, we can compensate for the fact that you have a lower density in certain panels, higher density in others but you still get a uniform output.”
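The relationship between pitch, wall size and pixel density can be made concrete with a short sketch. It assumes pitch is the centre-to-centre LED spacing in millimetres, and the gain function is a simple area-density normalisation that treats every emitter as identical. That normalisation is an illustrative assumption and not disguise’s method, and the 3.9 mm panel is a hypothetical example.

```python
import math

def wall_resolution(width_m, height_m, pitch_mm):
    """Whole LED pixels that fit across and up a flat wall."""
    return (math.floor(width_m * 1000.0 / pitch_mm),
            math.floor(height_m * 1000.0 / pitch_mm))

def pixels_per_m2(pitch_mm):
    """Emitter density for a square grid at the given pitch."""
    return (1000.0 / pitch_mm) ** 2

def uniformity_gain(pitch_mm, reference_pitch_mm):
    """Per-emitter drive scale so a coarser panel matches the reference
    panel's light output per unit area (assumes identical emitters)."""
    return (pitch_mm / reference_pitch_mm) ** 2

cols, rows = wall_resolution(10.0, 5.0, 2.8)  # the 10m x 5m wall from the text
gain = uniformity_gain(3.9, 2.8)              # hypothetical coarser panel
```

A 10m x 5m wall at 2.8 mm works out to a few thousand pixels across. In this simplified model a coarser panel has fewer emitters per square metre, so each one would need proportionally more drive to match the finer panels.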

Colour Space

disguise is also very aware that the post-production effects team and the final grading of the film are very sensitive to the color spaces used on set. They have been working with both Epic’s UE4 team and manufacturers of LED screens to “exploit the dynamic range beyond standard color space (Rec2020) and get the DOP, the sort of analog dynamic range that they would have had around 35mm film, which we’ve slowly been sort of losing and having to add back in postproduction,” Plowman elaborates. In reality, on set there is the color space that represents what the camera can record, a color space that the LED walls can output, and the abstract color space that the renderer works in, both live and later in post. disguise is working to expand the notional intersection of these various color spaces and provide a wider, more dynamic color space, which is stable and calibrated. Not only that, but the system would allow for the fact that the recorded color space is not fixed: aperture, ISO, resolution, lenses, and other factors can all affect the color, or rather the way the CMOS sensor records color information. This becomes vital as props and partial sets inside the LED stage may need to match set extensions in the real-time rendered computer graphics on the LEDs, and the same ‘object’, real and digitally extended, must have the same tone and color values as perceived by the viewer.
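The notion of an intersection of color spaces can be sketched in chromaticity terms. The example below uses the published CIE 1931 xy primaries of three standard gamuts as stand-ins for the camera, wall and renderer; real devices have their own measured gamuts, and a full treatment would also cover luminance and dynamic range, so this is only a two-dimensional illustration with hypothetical helper names.

```python
# CIE 1931 xy chromaticities of the (R, G, B) primaries.
REC709 = ((0.640, 0.330), (0.300, 0.600), (0.150, 0.060))
DCI_P3 = ((0.680, 0.320), (0.265, 0.690), (0.150, 0.060))
REC2020 = ((0.708, 0.292), (0.170, 0.797), (0.131, 0.046))

def _cross(a, b, p):
    """Z component of (b - a) x (p - a); its sign says which side p is on."""
    return (b[0] - a[0]) * (p[1] - a[1]) - (b[1] - a[1]) * (p[0] - a[0])

def in_gamut(xy, gamut):
    """True if chromaticity xy lies inside the gamut triangle."""
    r, g, b = gamut
    signs = [_cross(r, g, xy), _cross(g, b, xy), _cross(b, r, xy)]
    return all(s >= 0 for s in signs) or all(s <= 0 for s in signs)

def in_all(xy, gamuts):
    """True if xy is reproducible in every gamut, i.e. in the intersection."""
    return all(in_gamut(xy, g) for g in gamuts)

D65_WHITE = (0.3127, 0.3290)
SATURATED_GREEN = (0.19, 0.75)  # arbitrary test point near the Rec.2020 green

white_ok = in_all(D65_WHITE, [REC709, DCI_P3, REC2020])
green_ok = in_all(SATURATED_GREEN, [DCI_P3, REC2020])
```

A neutral white sits inside all three triangles, while a very saturated green can be inside the widest gamut and outside a narrower one. Colors like that fall outside the shared region, which is why widening the intersection matters for matching props to their digital extensions.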

End game: Lightfields

There is little doubt the endpoint for disguise’s R&D is taking an LED stage, or a new variation of it, to the point of being a Lightfield, especially as the Unreal Engine is already able to do real-time ray tracing, so in a sense it is already possible to understand how light varies across the volume of the stage. The technology does not exist yet, and until it does the team is keen to provide the most accurate, well-calibrated, and complete solution to high-end seamless virtual production in an LED volume.

Article content ref by: fxguide.com